Google is the biggest search engine today. According to SEMrush, Google has about 4.3 billion users worldwide and a 92.24% share of the search engine market. In other words, more than nine out of ten searches go through Google rather than another search engine. But did you know that Google’s ranking algorithm is far from the best? Why is Google’s search engine biased?

By the end of this article, you’ll have a better understanding of the flaws in Google’s search engine.

4 Reasons Why Google’s Search Engine Is Biased

Here, we’ll highlight the major flaws in how Google ranks content, along with potential solutions.

1. Google Ranks Articles Based On Site Authority

One main reason why Google’s search engine is biased is that it ranks articles based on ‘website authority’.

Let me break it down.

Imagine that a small blog devoted entirely to bicycles publishes a very detailed guide on how to ride a bicycle. If Forbes, which is not known as an authority on bicycles, publishes a similar article targeting the same keyword, it will rank higher than the small site on Google. Why?

Because, in Google’s eyes, Forbes has been around for a long time and is a well-known brand, unlike the small site. As a result, big brands like Forbes are more likely to outrank smaller sites competing for the same keywords, irrespective of the quality of the content.

This is a major problem with the search engine. The Google algorithm is biased and favors established brands over other websites.

Potential Solution: Each article should compete fairly with others on the search engine, regardless of the source domain’s history or perceived authority.

2. Google Pretends To Care So Much About Quality

The most common message from the Google Search Central YouTube channel is to publish quality content for your readers.

Quite frankly, there is nothing better than writing quality content for human readers. That’s why I personally hate those AI writing tools.

Anyway, there is more to quality content than avoiding AI writing tools. Whatever the case, Google is right: publish only top-quality content that satisfies specific queries.

However, it may interest you to know that smaller sites can hardly outrank bigger sites for highly competitive keywords, no matter the quality of their content.

Again, it boils down to perceived sitewide ‘authority’ taking precedence over a head-to-head comparison of the individual articles.

Potential Solution: The quality of individual content must take priority over any nonsensical sitewide advantage.

3. Google Likely Apportions a Traffic Quota Per Site

Have you ever been stuck at a certain range of search engine traffic even though you kept publishing new content regularly?

I have experienced this firsthand, and I know a couple of people who have too. I’m sure there are thousands (if not millions) of blogs out there experiencing the same thing.

I was stuck at 12-15k monthly visits for about a year, even though I kept publishing new content. To put things in perspective, about 120 articles were bringing in 12-15k monthly visits, and after publishing about 80 more articles, I am still getting the same range of search engine traffic.

How on earth is that possible? It seems unrealistic.

A colleague had a similar experience. He was getting 15-20k monthly visits from just 50 articles. He was very excited and published 150 more articles. Guess what?

The range of his search engine traffic did not increase.

Many more people out there experience a similar situation.

What this likely means is that Google pegs a website at a certain level of search traffic until the site earns certain signals, in most cases ‘authoritative’ links, after which its organic traffic starts trending upward.

This is very biased.

No matter how good some articles are, they never get the traffic they deserve, because Google likely sets an overall traffic quota per site (which changes over time) that is shared across all of its articles.
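If the quota hypothesis above holds, every new article dilutes the traffic of the existing ones. The sketch below works through that arithmetic using the traffic figures reported in this section. It is a hypothetical model only: it assumes a fixed monthly quota split evenly across a site’s articles, which Google has not confirmed and which real traffic, usually concentrated on a few pages, rarely resembles. The function name `traffic_per_article` is illustrative.

```python
# Hypothetical model of the per-site traffic quota suggested in this article.
# ASSUMPTION: a fixed monthly quota is split evenly across all articles.
# This is not a documented Google mechanism; the figures are the ones reported above.


def traffic_per_article(site_quota: int, article_count: int) -> float:
    """Average monthly visits per article if a fixed site quota is split evenly."""
    return site_quota / article_count


# (label, low quota, high quota, articles before, articles after)
CASES = [
    ("Author", 12_000, 15_000, 120, 200),    # ~120 articles, then ~80 more
    ("Colleague", 15_000, 20_000, 50, 200),  # 50 articles, then 150 more
]

for label, low, high, before, after in CASES:
    print(
        f"{label}: "
        f"{traffic_per_article(low, before):.0f}-{traffic_per_article(high, before):.0f} "
        f"visits/article with {before} articles, "
        f"{traffic_per_article(low, after):.0f}-{traffic_per_article(high, after):.0f} "
        f"with {after}"
    )
```

Under this model, the author’s average falls from 100-125 to 60-75 visits per article, and the colleague’s from 300-400 to 75-100, even though each site’s total traffic stays flat.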

Potential Solution: There should be no cap on how much traffic an individual article on a site can get.

4. Sites are ‘Punished’ Unnecessarily

Granted, it is very important that spammy sites are de-indexed from search engines, and unsafe sites should be ‘punished’ too.

I support that and I understand it.

But did you know that Google ‘punishes’ sites with good content for trivial reasons?

In my experience, Google has deranked some of the articles I built backlinks to, for no apparent reason.

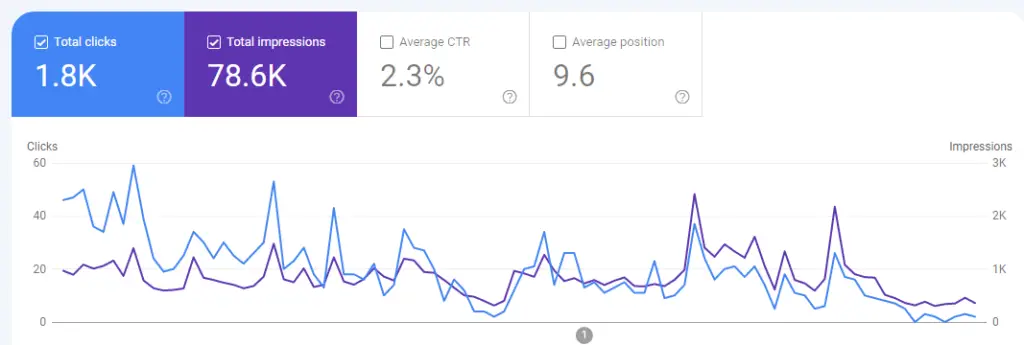

See an example below of this one article I built a link to.

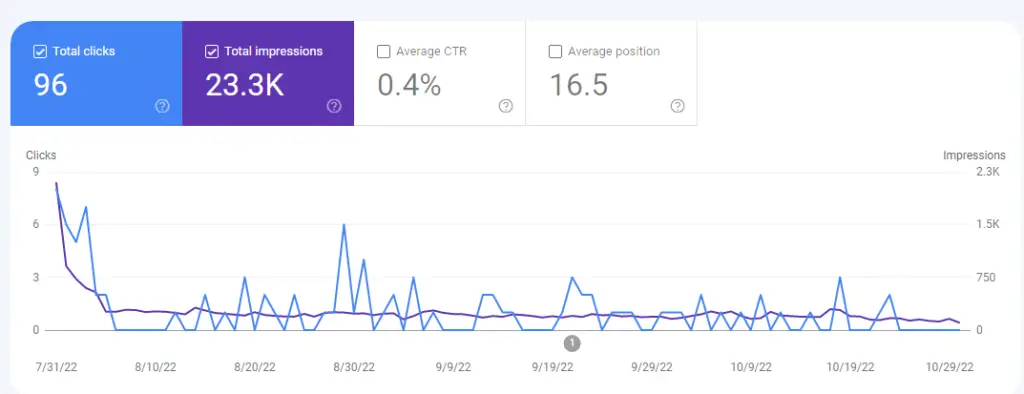

I thought it was a coincidence until I observed the same thing with another article I built a link to. See below.

Mind you, the links were built on reputable sites with great content.

My guess is that Google considers the links unnatural or paid, hence the deranking.

But in reality, it makes no sense.

Don’t get me wrong. I’m not saying that Google must count these links and boost the ranking of these articles.

Google has every right to either count or ignore any link. But why derank or punish an article when it could simply ignore the link and leave the article where it was? It makes no sense at all, and it is one of the reasons Google is biased.

Unfortunately, many sites experience the same thing I did, and there is absolutely nothing we can do about it.

It is worth mentioning that ‘unnatural’ link building is just one of many reasons Google ‘punishes’ a site or an article unfairly.

Potential Solution: It would be better if Google simply ignored harmless practices rather than punishing a site or article for what it deems wrong.

Final Thought

As we all know, Google is the biggest search engine in the world, and it does a great job of answering billions of queries daily. However, the search engine is far from perfect.

One major reason Google’s search engine is biased is that it ranks each article based on sitewide ‘authority’ rather than individual content quality. As a result, big websites will always have a better shot at outranking smaller sites for competitive keywords, regardless of the quality of their content.